Increasingly, we use our biometric data to power the technology we rely on in our day-to-day lives. From using our faces to unlock our smartphones to using our fingerprints for air travel, technology has enabled us to track and surveil people at an alarming rate, often in ways that are invisible to us and without our consent.

However, surveillance and its problematic relationship to technology are not new. Since its launch, Amazon's facial recognition software, Rekognition, has faced intense scrutiny, especially because Amazon heavily targeted law enforcement as a client.

> ACLU took Amazon's facial recognition tech—which Amazon is aggressively selling to police—and loaded it with 25,000 mugshots. Then, they ran photos of members of Congress against the mugshots. They got 28 matches, 40% of them Congressmen of color. https://t.co/Wix11Z3poY
>
> Trevor Timm (@trevortimm), July 26, 2018

In 2018, the ACLU raised several questions about the technology. One of those questions concerned the problematic data its test relied on: a database of 25,000 public arrest photos.

Datasets like mugshots are widely known to be biased because they do not accurately reflect the makeup of the broader United States population; specifically, they overrepresent BIPOC people. Mugshots are also often of poor image quality. Combined with other problems, such as a higher false positive rate when identifying BIPOC people as offenders and generally low accuracy, these issues have put the use of facial recognition in policing back in the public spotlight.

The renewed focus on the Black Lives Matter movement and police brutality has led big tech companies like IBM, Amazon, and Microsoft to step back, in some cases only temporarily, from selling facial recognition to law enforcement. While facial recognition technology may be temporarily less available to law enforcement, there are several other questions we as technologists should be grappling with. The availability of a technology is only part of the discussion; the other part lies in how that technology will be applied.

If facial recognition technology is weaponized by police, it will not be experienced uniformly by all people. How it affects each of us will be shaped by our social location: our race, our gender, our age, and where we live.

Chicago: A Segregated City

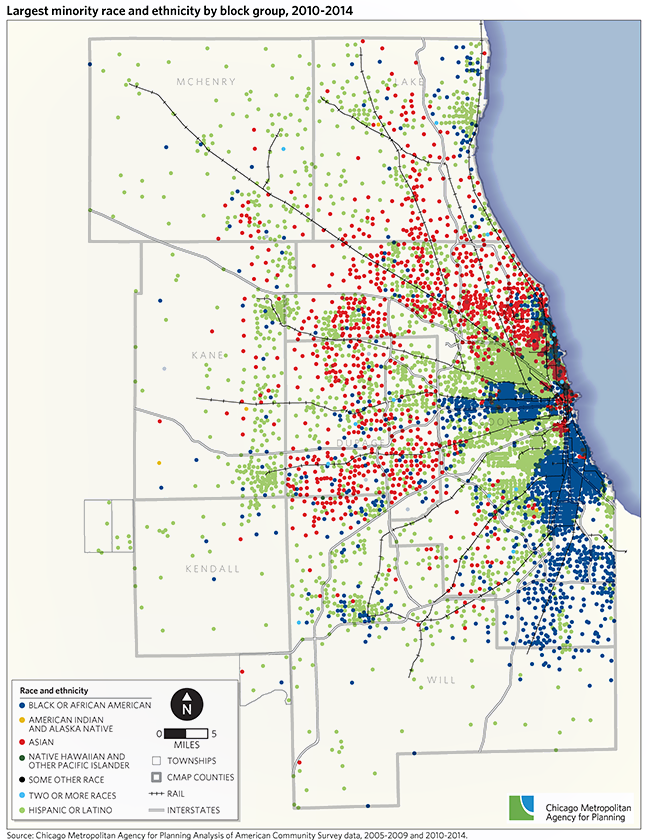

Take Chicago as an example. At times labeled the most segregated city in the United States, Chicago's segregation is plain to see in this 2015 map from CMAP (the Chicago Metropolitan Agency for Planning), built with 2010-2014 American Community Survey data.

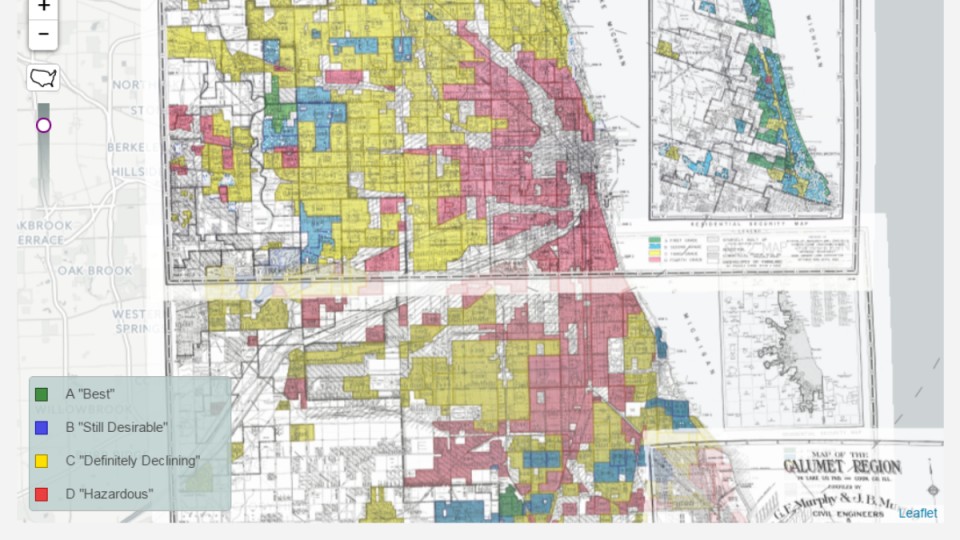

The segregated landscape of Chicago is no accident. Instead, we can understand Chicago as the result of decades, even centuries, of systemic racism and oppression. Redlining is one policy that clearly demonstrates the intentional remapping of Chicago's communities. As a lending policy, redlining graded Chicago's community areas on a scale from the "best" places for investment to the worst, or most "hazardous," places for investment.

Throughout the 1930s and 1940s, the federal Home Owners' Loan Corporation and the Federal Housing Administration (FHA) used this practice, overwhelmingly marking communities of color, largely Black communities, in red as "hazardous." That label meant loans were not extended in these areas. Because these loans were backed by the FHA, they carried low interest rates and required smaller down payments to purchase a home. Additionally, the home equity these loans built became the economic catalyst white America used to finance dreams such as sending their children to college. Redlining is but one example of how divestment, informed by race and other socioeconomic factors, had the intended result of moving wealth out of BIPOC communities and concentrating it in white communities.

My Chicago is not your Chicago

Systemic racism, however, is not experienced only in housing. Policing is intrinsically connected to housing, as is wealth. Where police are deployed (or not deployed), how policing happens, and how any one person experiences it are all shaped by the hyperlocal conditions on the ground as well as by that person's identity.

Some quick stats:

- Chicago's South and West sides are the community areas with the highest homicide rates

- Most people arrested in a given year are BIPOC

- Most police deployed in Chicago's highest-crime community areas are also the least experienced

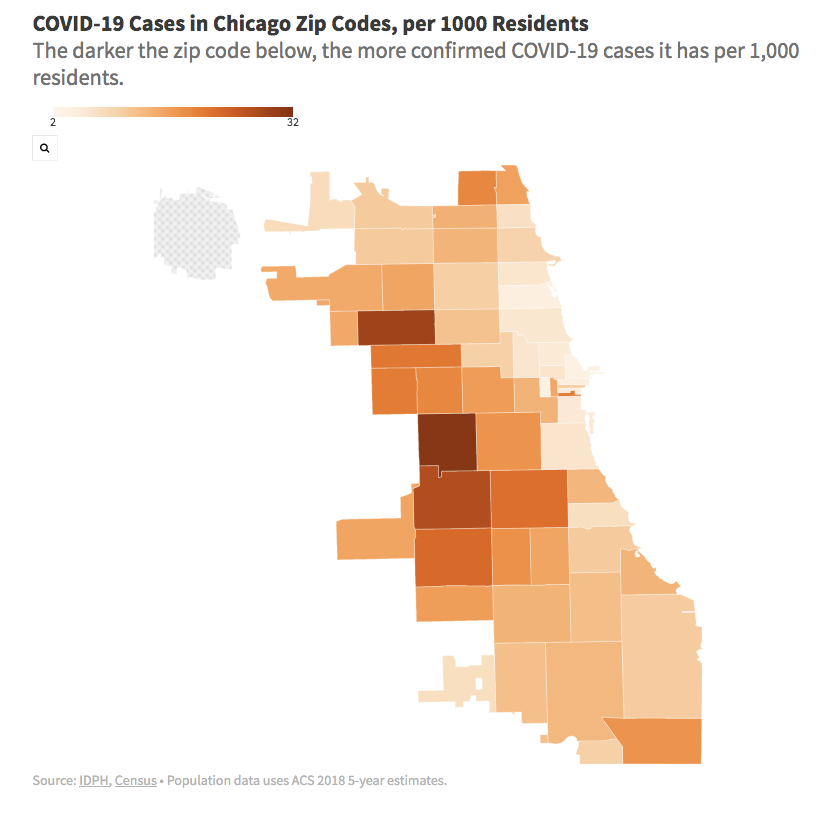

Additionally, the areas hardest hit by COVID-19 are overwhelmingly BIPOC communities.

So why should we care about systems of oppression?

When we as technologists build tools, we do not build them in a vacuum, free of the bias in the world around us. Nor do we fully control how our tools will be used. It is our moral imperative to question not only how our technology is built, but why we need it. If the tools we create are based on the social landscape we live in, they merely "codify the past." As author and activist Cathy O'Neil warns us, we as technologists should not presume that our technology can "invent the future." "Doing that requires moral imagination, and that's something only humans can provide."

I am calling on you all to take the time to educate yourselves and understand how technology intersects with bias, with systems of power, and with systems of inequity. I've started a reading list on bias and equity in technology that I will be working through, and I am soliciting feedback on it.

If you have a suggestion for the list, you can add it 👉 here.

We all have to do the work. To show up. To listen. To learn. To stay engaged. Will you join me?